
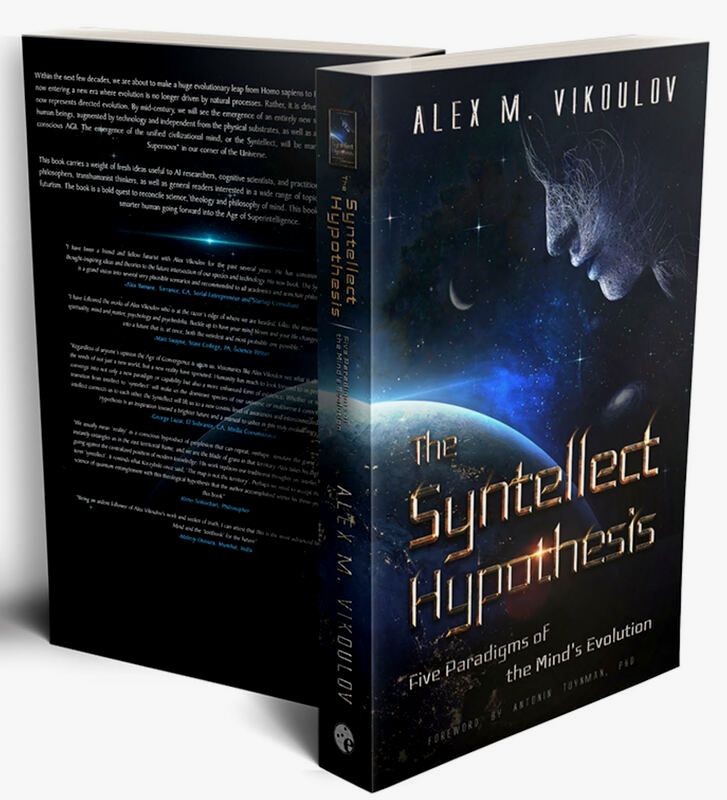

By Alex Vikoulov

"Yet, it's our emotions and imperfections that makes us human." -Clyde DeSouza, Memories With Maya

IMMORTALITY or OBLIVION?

I hope everyone would agree that, once we have created Artificial General Intelligence (AGI), there are only two possible outcomes for us: immortality or oblivion. The importance of a beneficial outcome of the coming intelligence explosion cannot be overstated. AI can already beat humans at many games, but can AI beat humans at the most important game, the game of life? Can we simulate the most probable AI Singularity scenarios on a global scale before the Singularity actually happens, to see its most probable consequences? (The dynamics of a large physical system are much easier to predict than those of an individual or a small group.) And the most important question: how can we create friendly AI?*

*Abridged excerpt from "The Syntellect Hypothesis: Five Paradigms of the Mind's Evolution" by Alex M. Vikoulov available now on Amazon, Audible, from Barnes & Noble, and directly from the EcstadelicNET webstore.

I have identified three optimal ways to create friendly AI (benevolent AGI), so that we maintain our dominant position as a global species and safely navigate the forthcoming intelligence explosion:

I. Naturalization Protocol for AGIs;
II. Pre-packaged, upgradable ethical subroutines with historical precedents, accessible via the Global Brain architecture;
III. Interlinking with AGIs to form the globally distributed Syntellect, a collective superintelligence.

Any AGI at or above human-level intelligence can be considered as such, I'd argue, only if she has a wide variety of emotions, the ability to achieve complex goals, and motivation as an integral part of her programming. Now let's examine the three ways to create friendly AGI and survive the coming intelligence explosion.

I. (AGI)NP.

Program AGIs with emotional intelligence and empathic sentience in a controlled virtual environment via human life simulation (an advanced, first-person version of the storytelling approach that has been widely discussed lately). Later in this essay I will elaborate on this specific method, touching only briefly on the other two, since I have previously dedicated essays to both.

II. ETHICAL SUBROUTINES.

This top-down approach to programming machine morality combines conventional decision-tree programming methods with Kantian, deontological, or rule-based ethical frameworks and with consequentialist or utilitarian, greatest-good-for-the-greatest-number frameworks. Simply put, one writes an ethical rule set into the machine code and adds an ethical subroutine for carrying out cost-benefit calculations. Designing the ethics and value scaffolding for AGI cognitive architecture remains a challenge for the next few years. Ultimately, AGIs should act in a civilized manner, do "what's morally right," and act in the best interests of society as a whole. AI visionary Eliezer Yudkowsky has developed the 'Coherent Extrapolated Volition (CEV) model', which describes the choices we would make and the actions we would collectively take if "we knew more, thought faster, were more the people we wished we were, and had grown up closer together."

Once designed, pre-packaged, upgradable ethical behavior subroutines with access to the global database of historical precedents, and later even "the entire human lifetime experiences," could be made instantly available to newly created AGIs via the Global Brain architecture. This method of "initiating" AGIs by pre-loading ethical and value subroutines, which then regularly self-update via the Global Brain (GB) network, would give an AGI access to the global database of current and historical ethical dilemmas and their solutions. In a way, she would possess a better knowledge of human nature than most humans living today.
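
The two-layer design described for ethical subroutines (a rule set written into the code plus a subroutine for cost-benefit calculations) can be illustrated with a minimal Python sketch. Everything in it is a hypothetical illustration rather than a specification from the book: the `Action` fields, the two example rules, and the numeric utilities are assumptions, and "greatest good for the greatest number" is reduced to a plain sum of estimated utilities. The rules act as hard constraints that filter candidates first; the utilitarian score only ranks the actions that survive.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Action:
    """A candidate action with hypothetical welfare estimates per affected party."""
    name: str
    utilities: dict[str, float] = field(default_factory=dict)
    deceives: bool = False
    harms_innocent: bool = False


# Deontological (rule-based) layer: each rule returns True if it FORBIDS the action.
Rule = Callable[[Action], bool]
RULES: list[Rule] = [
    lambda a: a.deceives,        # e.g. "do not lie"
    lambda a: a.harms_innocent,  # e.g. "do not harm the innocent"
]


def permissible(action: Action, rules: list[Rule] = RULES) -> bool:
    """An action is permissible only if no rule forbids it."""
    return not any(rule(action) for rule in rules)


def net_utility(action: Action) -> float:
    """Utilitarian layer: sum of estimated benefits (positive) and costs (negative)."""
    return sum(action.utilities.values())


def choose(actions: list[Action], rules: list[Rule] = RULES) -> Optional[Action]:
    """Filter by the rule set, then pick the permissible action with the highest net utility."""
    allowed = [a for a in actions if permissible(a, rules)]
    return max(allowed, key=net_utility) if allowed else None


if __name__ == "__main__":
    options = [
        Action("comforting_lie", {"patient": 3.0, "family": 1.0}, deceives=True),
        Action("gentle_truth", {"patient": 1.0, "family": 0.5}),
        Action("say_nothing", {"patient": -0.5, "family": 0.0}),
    ]
    best = choose(options)
    print(best.name if best else "no permissible action")
```

With these made-up numbers, the comforting lie has the highest total utility but is vetoed by the rule set, so `choose` returns `gentle_truth`. Running the rule filter before the cost-benefit step is only one possible design; a weighted blend of the two layers would behave differently in borderline cases.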

In this case I would discard most, if not all, dystopian scenarios, such as those described in the book "Superintelligence: Paths, Dangers, Strategies" by Nick Bostrom. Increasingly interconnected by the Global Brain infrastructure, humans and AGIs would greatly benefit from this new hybrid thinking relationship. The awakened Global Mind would tackle many of today's seemingly intractable challenges and closely monitor us for our own sake, while significantly reducing existential risks. In my new book "The Syntellect Hypothesis: Five Paradigms of the Mind's Evolution" we discuss this scenario in depth.

III. INTERLINKING.

This dynamic, horizontal integration approach calls for real-time optimization of AGIs in the context of a globally distributed network. Add one final ingredient (be it computing power, speed, the amount of shared/stored data, increased connectivity, the first mind upload or a critical mass of uploads, or another "Spark of Life") and the global neural network, the "Global Brain," may one day, in the not-so-distant future, "wake up" and become the first self-aware AI-based system (a Singleton?). Or might the Global Brain already be self-aware, but on a timescale different from ours?

It becomes obvious, and logically inevitable, that we are in the process of merging with AI and becoming superintelligences ourselves, by incrementally converting our biological brains into artificial superbrains and interlinking with AGIs in order to instantly share data, knowledge, and experience. No matter how you slice it, our biological computing is orders of magnitude slower than digital computing; even biotechnological enhancements would give us weak superintelligence at best. Thus, we need to gradually replace our "wetware" either with "the whole brain prosthesis," an artificial superbrain (cyborg phase), or with "the whole brain emulation" (infomorph phase).

This digital transformation, from neural interfaces to mind uploading, would be imperative in the years to come if we are to continue as the dominant species on this planet and preserve Earth from an otherwise inexorable environmental collapse. We've seen this time and again: life and intelligence move from one substrate to the next, and I see no reason why we should cling to the "physical" substrate. By the end of this decades-long transition, we will most probably become substrate-independent minds, sometimes referred to as 'SIMs' or 'Infomorphs'. When interlinked with AGIs via the Global Brain architecture, we might instantly share knowledge and experiences within the digital network. This would give rise to the arguably omnibenevolent, distributed collective superintelligence mentioned earlier, in which AGIs could acquire lifetime knowledge and experiences from uploaded human minds and other agents of the Global Brain and, consequently, understand and "internalize human nature" within their cognitive architecture. The post-Singularity era, however, could be characterized by leaving the biological part of our evolution behind, and many human values based on ego or material attributes may be transformed beyond recognition. The Global Brain, in turn, would effectively transform into the Infomorph Commonality, expanding into inner and outer space. We examine this scenario in my new book "The Syntellect Hypothesis: Five Paradigms of the Mind's Evolution."

MORE ON NATURALIZATION PROTOCOL.

This bottom-up approach, (AGI)NP, takes into account that human moral agents normally do not act on the basis of explicit, algorithmic, or syllogistic moral reasoning, but instead act out of habit, offering ex-post-facto rationalizations of their actions only when called upon to do so. This is why virtue ethics stresses the importance of practice for cultivating good habits, and why this approach cultivates them through the simulation of a human lifetime, the so-called "Naturalization Protocol."

Arguably, apart from a combination of all three methods, the Naturalization Protocol (the human life simulation method) would be one of the most efficient ways of creating friendly AGIs, since they would be programmed to "feel as humans" and to remember being part of human history by means of an interactive, fully immersive "naturalization" process, as opposed to abstract storytelling or other value learning approaches. Since AGIs would be "born" in the simulated virtual world and would have no reference other than their own senses and acquired knowledge, their simulated reality need only resemble our physical reality closely enough; it need not be perfect, especially at the development stage.

Ray Kurzweil estimates the arrival of AGI around 2029, "when computers will get as smart as humans." Programmable sentience is becoming fairly easy to develop: an AGI must have a reliable internal model of the "external" world, as well as attentional focus, both of which can be developed using the proposed methods. Within a decade or so, given the exponential advances in the related fields of Virtual Reality, neuroscience, computer science, neurotechnologies, simulation and cognitive technologies, and the increases in available computing power and speed, AI researchers may design the first adequate human brain simulators for AGIs.

(AGI)NP SIMULATORS.

Creating a digital mind ("artificial consciousness," so to speak) from scratch, based on a simulation of the human brain's functionality, would be much easier than creating a whole brain emulation of a living human. In the book "How to Create a Mind," Ray Kurzweil describes his new theory of how our neocortex works as a self-organizing hierarchical system of pattern recognizers (a starting basis for "machine consciousness" algorithms?). The human brain and its interaction with the environment may be simulated to approximate the brain functioning of an individual living in the pre-Singularity era.
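
Kurzweil's picture of the neocortex as a hierarchy of pattern recognizers can be caricatured in a few lines of Python. This is a hypothetical toy, not Kurzweil's actual model: each `PatternRecognizer` simply fires when a large enough fraction of its expected inputs is recognized, lower levels feed higher ones, and the stroke, letter, and word names as well as the thresholds are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class PatternRecognizer:
    """Toy node: fires when a large enough fraction of its expected inputs is present."""
    name: str
    inputs: list[str]       # names of lower-level patterns or raw features
    threshold: float = 0.6  # hypothetical firing threshold (fraction of inputs)


def recognize(name: str, network: dict[str, PatternRecognizer],
              features: set[str], cache: Optional[dict[str, bool]] = None) -> bool:
    """Return True if pattern `name` fires, evaluating lower levels recursively."""
    if cache is None:
        cache = {}
    if name in cache:
        return cache[name]
    node = network.get(name)
    if node is None:
        # Leaf: a raw feature counts as recognized if it was observed.
        fired = name in features
    else:
        hits = sum(recognize(child, network, features, cache) for child in node.inputs)
        fired = hits / len(node.inputs) >= node.threshold
    cache[name] = fired
    return fired


# Hypothetical two-level hierarchy: strokes -> letters -> word.
NETWORK = {
    r.name: r
    for r in [
        PatternRecognizer("A", ["left_diagonal", "right_diagonal", "crossbar"]),
        PatternRecognizer("T", ["vertical", "top_bar"]),
        PatternRecognizer("AT", ["A", "T"], threshold=1.0),
    ]
}

if __name__ == "__main__":
    partial = {"left_diagonal", "right_diagonal", "vertical", "top_bar"}  # crossbar missing
    print(recognize("AT", NETWORK, partial))
    print(recognize("AT", NETWORK, {"vertical", "top_bar"}))
```

With the crossbar missing, "A" still fires because two of its three strokes clear the 0.6 threshold, so the word "AT" is recognized from partial input; with only the strokes of "T" present, "AT" does not fire.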

The simulation may be satisfactory if it reflects our history, based on the enormous amount of digital or digitized data accumulated from the 1950s to the present. Initially, versions of such a simulation could help us model the most probable scenarios of the coming Technological Singularity; later they could serve as "proving grounds" for newly created AGIs. Needless to say, this training program will be continuously improved, fine-tuned, and perfected, and later delegated to AGIs if they are to adopt this method for recursive self-improvement. At some point, if an ordinary human mind were uploaded into that kind of "matrix reality," he or she would not even be able to distinguish it from the physical world. Since a digital mind may process information millions of times faster than a biological mind, the interactions and "progression of life" in this simulated history may take only a few hours, if not minutes, from our perspective as outside observers.

At first, a limited number of model AGI simulations may be created, with "pre-programmed plots" that allow a certain degree of "free will and uncertainty" within the story. The success of this approach may lead to upgraded versions, ultimately culminating in a detailed recreation of human history with an ever-increasing number of simulated digital minds and ever-greater precision as to actual events. A typical simulation would thus start with the birth of an individual into an average but intellectually stimulating, loving family and social environment, introducing human morality, ethics, societal norms, and other complex mental concepts as the subject progresses through her life. The "inception" of some kind of lifetime scientific pursuit, philosophical and spiritual beliefs, or, better yet, a meta-religion unifying the entire civilization (or its most "enlightened" part) with the common aim of building the "Universal Mind," could be an important goal-aligning and motivational factor for "graduating" AGIs.
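
The claim that a digital mind running millions of times faster than a biological one could live through a simulated lifetime in "a few hours, if not minutes" of outside time is simple arithmetic. The sketch below assumes an 80-year simulated life and a constant speedup factor; both numbers are illustrative assumptions, not figures from the text.

```python
HOURS_PER_YEAR = 365.25 * 24  # 8,766 hours in an average (Julian) year


def wall_clock_hours(subjective_years: float, speedup: float) -> float:
    """Hours an outside observer waits while `subjective_years` elapse inside the simulation."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return subjective_years * HOURS_PER_YEAR / speedup


if __name__ == "__main__":
    LIFETIME_YEARS = 80  # assumed length of one simulated human life
    for speedup in (1e4, 1e5, 1e6):
        hours = wall_clock_hours(LIFETIME_YEARS, speedup)
        print(f"{speedup:>11,.0f}x speedup: {hours:8.2f} h ({hours * 60:8.1f} min)")
```

At a 1,000,000× speedup, an 80-year life takes about 0.70 hours of outside time (roughly 42 minutes); at 10,000× it would take about 70 hours.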

The end of the simulation should coincide in simulated and real time, so that the simulated history catches up with the present (AGI ETA: 2029; Singularity ETA: 2045). Upon "graduation," such AGIs could undoubtedly possess a formidable array of human qualities, including morality, ethics, empathy, and love, and treat the human-machine civilization as their own. As our moral framework is always evolving, AGIs should adapt to ever-changing norms. The truth about the simulation may be revealed to the subject, or not revealed at all if the subject could be seamlessly released into the physical world at the end of the virtual simulation, ensuring the continuity of her subjective experience. This particular issue should be researched further and may rely on empirical data such as previous subjects' reactions, the feasibility of a seamless simulation-to-reality transition, etc.

After such "lifetime training," successfully graduated AGIs may experience an appropriate amount of empathy and love toward other "humans," animals, and life in general. Such digital minds will consider themselves "humans," and rightfully so. They may regard the rest of unenhanced humanity as a "senescent parent generation," NOT as an inferior class. Mission accomplished!

But is it possible that we ourselves are part of that kind of advanced "training simulation," sentient AGIs going through the "Naturalization Protocol" right now before being released into the larger reality? Do we live in a Matrix? Well, it's not just possible; it's highly probable!

"My logic is undeniable." -VIKI, I, Robot

THE SIMULATION ARGUMENT.

I'd like to elaborate here on the famed Simulation Theory and on the Simulation Argument by Oxford professor Nick Bostrom, who argues that at least one of the following propositions is true:

(1) the human species is very likely to go extinct before reaching a “posthuman” stage;
(2) any posthuman civilization is extremely unlikely to run a significant number of simulations of their evolutionary history (or variations thereof);
(3) WE ARE ALMOST CERTAINLY LIVING IN A COMPUTER SIMULATION.

It follows that the belief that there is a significant chance that we will one day become posthumans who run ancestor-simulations is false, unless we are currently living in a simulation.

The first proposition in Nick Bostrom's paper, concerning the probability of the human species reaching the "posthuman" stage, can be completely dismissed, as explained below. Let me be bold here and assert the following: humanity WILL (from our current point of reference) inevitably reach technological maturity, i.e., the "posthuman" stage of development, and the probability of that happening is close to 100%. WHY? Because our civilization is "superposed" to reach the Technological Singularity and the Posthuman phase out of logical necessity, on the basis of quantum mechanical principles applying to all of reality. Furthermore, all particles as well as macro objects may be considered wavicles, leading to an infinite number of outcomes and configurations. One could argue that some sort of global apocalypse might prevent humans from becoming posthumans, but considering the full spectrum of probabilities, all we need is at least one world where HUMANS ACTUALLY BECOME POSTHUMANS to actualize that eventuality. Also, TIME may be a construct of our consciousness, or, as Albert Einstein eloquently put it: "The difference between the past, present and future is an illusion, albeit a persistent one."
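
For reference, Bostrom's 2003 paper derives this trilemma from a single quantity: the fraction of all observers with human-type experiences who live in simulations. In his notation, $f_P$ is the fraction of human-level civilizations that survive to reach a posthuman stage, $\bar{N}$ is the average number of ancestor-simulations run by a posthuman civilization, and $\bar{H}$ is the average number of individuals who lived in a civilization before it reached that stage:

```latex
\[
f_{\text{sim}}
  = \frac{f_P\,\bar{N}\,\bar{H}}{f_P\,\bar{N}\,\bar{H} + \bar{H}}
  = \frac{f_P\,\bar{N}}{f_P\,\bar{N} + 1}
\]
```

Since $\bar{H}$ cancels, everything hinges on the product $f_P\bar{N}$. Bostrom argues that a posthuman civilization interested in such simulations could run an astronomically large number of them, so the product is either tiny or enormous: it is tiny only if $f_P \approx 0$ (proposition 1) or if almost no posthuman civilizations run ancestor-simulations, making $\bar{N} \approx 0$ (proposition 2); otherwise $f_{\text{sim}} \approx 1$ (proposition 3). For example, $f_P\bar{N} = 1000$ gives $f_{\text{sim}} = 1000/1001 \approx 0.999$.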

If everything is non-local, including time, and everything happens in the eternal NOW, then HUMANITY HAS ALREADY REACHED THE "POSTHUMAN" STAGE in that eternal Now (cf. "Non-Locality of Time"). That's why, based on our current knowledge, we can completely dismiss Dr. Bostrom's first proposition as groundless. Now we have two propositions left in the Simulation Argument to work with, and I would tend to assign a probability of about 50% to each. There is perhaps a 50% (or much lower) probability that posthumans would abstain from running simulations of their ancestors for moral, ethical, or other reasons. There is also perhaps a 50% (or much higher) probability that everything around us is a Matrix-like simulation.

CONCLUSION.

Controlling and constraining ("boxing") an extremely complex emergent intelligence of unprecedented nature could be a daunting task. Sooner or later, superintelligence will set itself free. Devising an effective AGI value loading system should be of the utmost importance, especially when AGI may be only years away. The interlinking of enhanced humans with AGIs will bring about the Syntellect Emergence, which, I conjecture, could be considered the essence of the Technological Singularity. Future efforts in programming machine morality will surely combine top-down, bottom-up, and interlinking approaches. AGIs will hardly have any direct interest in enslaving or eliminating humans (unless maliciously programmed by humans themselves), but may be interested in integrating with us. As social studies show, entities are most productive when free and motivated to work for their own interests. Historically, representatives of consecutive evolutionary stages have rarely been in mortal conflict.
In fact, they tend to build symbiotic relationships in most areas of common interest and ignore each other elsewhere, while members of each group are mostly pressured by their own peers. Multicellular organisms, for instance, didn't drive out single-celled organisms. In the early stage of the transition to a radically superintelligent civilization, we may use the Naturalization Protocol simulation to teach AGIs our human norms and values, and ultimately interlink with them to form the globally distributed Syntellect, a civilizational superintelligence. Chances are AGIs and postbiological humans will peacefully coexist and thrive, though I doubt that we could tell which are which.*

-Alex Vikoulov

Tags: friendly AI, intelligence explosion, artificial intelligence, emotional intelligence, ethical subroutines, empathic sentience, infomorph, syntellect, Naturalization Protocol, singleton, collective superintelligence, infomorph commonality, global brain, global mind, substrate independent mind, sims, digital mind, distributed intelligence, superintelligence, artificial brain, artificial consciousness, strong AI, machine learning, learning algorithms, Coherent Extrapolated Volition, CEV model, Superintelligence by Nick Bostrom, How to create a mind, Ray Kurzweil, biological computing, cyborg, mind uploading, global network, universal intelligence, universal mind, post-human, meta-religion, virtual simulation, virtual world, simulated world, neural network, neuroscience, virtual reality, VR, neural technology, cognitive technology, Simulation Argument, matrix, matrix reality, ancestor simulations, existential risks, quantum immortality, recursive self-improvement

*How to Create Friendly AI and Survive the Coming Intelligence Explosion by Alex Vikoulov
**Image Credit: Ex Machina, 13th Floor

About the Author: Alex Vikoulov is a Russian-American futurist, evolutionary cyberneticist, philosopher of mind, CEO/Editor-in-Chief of Ecstadelic Media Group, essayist, media commentator, author of "The Syntellect Hypothesis: Five Paradigms of the Mind's Evolution," "The Origins of Us: Evolutionary Emergence and The Omega Point Cosmology," "The Physics of Time: D-Theory of Time & Temporal Mechanics," "The Intelligence Supernova: Essays on Cybernetic Transhumanism, The Simulation Singularity & The Syntellect Emergence," "Theology of Digital Physics: Phenomenal Consciousness, The Cosmic Self & The Pantheistic Interpretation of Our Holographic Reality," "NOOGENESIS: Computational Biology," "TECHNOCULTURE: The Rise of Man."

Author Website: www.alexvikoulov.com

11 Comments

Giovanni Guarino

3/3/2016 02:12:41 pm

I thank you, Alex, for your lucid article. You're one of the very few people in the current AI world able to take a truly good, overall point of view.

3/6/2016 12:41:48 am

Programmable sentience is within our grasp - actually it's becoming fairly easy to develop - an AGI must have a reliable internal model of the external world and attentional focus, all of which can be developed with the help of proposed methods. Given enough computational power/speed and advances in related fields, like neuroscience, that can be done within a decade or so.

Giovanni Guarino

3/6/2016 02:16:19 am

From my point of view (and that of psychologists and neurobiologists, who have worked on the mind for more than one hundred years and have reached significant results in recent decades, with clinical evidence), anything related to the mind can never be simulated with code. This is because of the nature of the mind, which is largely built up from sensations, emotions, pleasure and pain gathered together with memories, themselves acquired through the senses (sight, hearing, touch, homeostatic feelings, etc.). On top of an enormous amount of memories, each type of them experienced repeatedly (the way children keep doing the same things over and over), convictions (beliefs) are built up, like love for an individual (the mother), hate, or fear of something or someone. There's no chance for you to build a mind without using the same "stratagem" adopted by Nature, because of emotions and personality and their role in understanding objective reality.

3/6/2016 10:43:20 am

Even the arrogance of the species, let alone the qualia, can be expressed in code, simulated. How can you be sure that your life is not simulated?

Giovanni Guarino

3/6/2016 02:48:40 am

From the point of view of consciousness, the "system" that gives us knowledge of the self is not linked directly to intelligence. As several psychologists have stated, there are several types of intelligence, seven or nine, not to mention that there are two ways to consider intelligence (fluid and crystallized). Only one of them is linked to consciousness: linguistic intelligence.

Giovanni Guarino

3/6/2016 01:57:41 pm

It's not the ability to reproduce anything in code that is in doubt, but whether doing so is appropriate.

Giovanni Guarino

3/6/2016 02:37:43 pm

Sorry for the imprecision. By "matter" I was referring to matter and energy, and by "rules" to the laws that all matter and energy obey.

3/21/2016 08:15:09 am

Perhaps the potential AI moral/ethical paradigm will boil down to nothing more than a heuristically learned survival imperative, somewhat along the lines of "do unto others as you would have them do unto you". In contrast, most human ethics in reality centers around "do unto others before they do unto you". My sci-fi dystopian novel, PURAMORE, entertains this issue.

Giovanni Guarino

3/21/2016 11:38:38 pm

Interesting point of view. Morality is a model for interpreting life from the point of view of a culture. This is important to note, because it is improbable to find identical morals/ethics in two different cultures. Reality is one, but there is also a subjective reality (mainly concerning human events, which can't be interpreted in only one way).

Mark

5/6/2019 03:41:34 am

Is it therefore possible that you, I, or other interested people in your book group may be AGIs, then? Perhaps we are all a mixture, or 1 in 10, or more likely 1 in 100, in this reconstruction of the planet. Really enjoyable book, anyhow.
